-

February 7, 2016The Error ModelTweet

Midori was written in an ahead-of-time compiled, type-safe language based on C#. Aside from our microkernel, the whole system was written in it, including drivers, the domain kernel, and all user code. I’ve hinted at a few things along the way and now it’s time to address them head-on. The entire language is a huge space to cover and will take a series of posts. First up? The Error Model. The way errors are communicated and dealt with is fundamental to any language, especially one used to write a reliable operating system. Like many other things we did in Midori, a “whole system” approach was necessary to getting it right, taking several iterations over several years. I regularly hear from old teammates, however, that this is the thing they miss most about programming in Midori. It’s right up there for me too. So, without further ado, let’s start.

Introduction

The basic question an Error Model seeks to answer is: how do “errors” get communicated to programmers and users of the system? Pretty simple, no? So it seems.

One of the biggest challenges in answering this question turns out to be defining what an error actually is. Most languages lump bugs and recoverable errors into the same category, and use the same facilities to deal with them. A

nulldereference or out-of-bounds array access is treated the same way as a network connectivity problem or parsing error. This consistency may seem nice at first glance, but it has deep-rooted issues. In particular, it is misleading and frequently leads to unreliable code.Our overall solution was to offer a two-pronged error model. On one hand, you had fail-fast – we called it abandonment – for programming bugs. And on the other hand, you had statically checked exceptions for recoverable errors. The two were very different, both in programming model and the mechanics behind them. Abandonment unapologetically tore down the entire process in an instant, refusing to run any user code while doing so. (Remember, a typical Midori program had many small, lightweight processes.) Exceptions, of course, facilitated recovery, but had deep type system support to aid checking and verification.

This journey was long and winding. To tell the tale, I’ve broken this post into six major areas:

- Ambitions and Learnings

- Bugs Aren’t Recoverable Errors!

- Reliability, Fault-Tolerance, and Isolation

- Bugs: Abandonment, Assertions, and Contracts

- Recoverable Errors: Type-Directed Exceptions

- Retrospective and Conclusions

In hindsight, certain outcomes seem obvious. Especially given modern systems languages like Go and Rust. But some outcomes surprised us. I’ll cut to the chase wherever I can but I’ll give ample back-story along the way. We tried out plenty of things that didn’t work, and I suspect that’s even more interesting than where we ended up when the dust settled.

Ambitions and Learnings

Let’s start by examining our architectural principles, requirements, and learnings from existing systems.

Principles

As we set out on this journey, we called out several requirements of a good Error Model:

-

Usable. It must be easy for developers to do the “right” thing in the face of error, almost as if by accident. A friend and colleague famously called this falling into the The Pit of Success. The model should not impose excessive ceremony in order to write idiomatic code. Ideally it is cognitively familiar to our target audience.

-

Reliable. The Error Model is the foundation of the entire system’s reliability. We were building an operating system, after all, so reliability was paramount. You might even have accused us as obsessively pursuing extreme levels of it. Our mantra guiding much of the programming model development was “correct by construction.”

-

Performant. The common case needs to be extremely fast. That means as close to zero overhead as possible for success paths. Any added costs for failure paths must be entirely “pay-for-play.” And unlike many modern systems that are willing to overly penalize error paths, we had several performance-critical components for which this wasn’t acceptable, so errors had to be reasonably fast too.

-

Concurrent. Our entire system was distributed and highly concurrent. This raises concerns that are usually afterthoughts in other Error Models. They needed to be front-and-center in ours.

-

Diagnosable. Debugging failures, either interactively or after-the-fact, needs to be productive and easy.

-

Composable. At the core, the Error Model is a programming language feature, sitting at the center of a developer’s expression of code. As such, it had to provide familiar orthogonality and composability with other features of the system. Integrating separately authored components had to be natural, reliable, and predictable.

It’s a bold claim, however I do think what we ended up with succeeded across all dimensions.

Learnings

Existing Error Models didn’t meet the above requirements for us. At least not fully. If one did well on a dimension, it’d do poorly at another. For instance, error codes can have good reliability, but many programmers find them error prone to use; further, it’s easy to do the wrong thing – like forget to check one – which clearly violates the “pit of success” requirement.

Given the extreme level of reliability we sought, it’s little surprise we were dissatisfied with most models.

If you’re optimizing for ease-of-use over reliability, as you might in a scripting language, your conclusions will differ significantly. Languages like Java and C# struggle because they are right at the crossroads of scenarios – sometimes being used for systems, sometimes being used for applications – but overall their Error Models were very unsuitable for our needs.

Finally, also recall that this story began in the mid-2000s timeframe, before Go, Rust, and Swift were available for our consideration. These three languages have done some great things with Error Models since then.

Error Codes

Error codes are arguably the simplest Error Model possible. The idea is very basic and doesn’t even require language or runtime support. A function just returns a value, usually an integer, to indicate success or failure:

int foo() { // <try something here> if (failed) { return 1; } return 0; }This is the typical pattern, where a return of

0means success and non-zero means failure. A caller must check it:int err = foo(); if (err) { // Error! Deal with it. }Most systems offer constants representing the set of error codes rather than magic numbers. There may or may not be functions you can use to get extra information about the most recent error (like

errnoin standard C andGetLastErrorin Win32). A return code really isn’t anything special in the language – it’s just a return value.C has long used error codes. As a result, most C-based ecosystems do. More low-level systems code has been written using the return code discipline than any other. Linux does, as do countless mission-critical and realtime systems. So it’s fair to say they have an impressive track record going for them!

On Windows,

HRESULTs are equivalent. AnHRESULTis just an integer “handle” and there are a bunch of constants and macros inwinerror.hlikeS_OK,E_FAULT, andSUCCEEDED(), that are used to create and check values. The most important code in Windows is written using a return code discipline. No exceptions are to be found in the kernel. At least not intentionally.In environments with manual memory management, deallocating memory on error is uniquely difficult. Return codes can make this (more) tolerable. C++ has more automatic ways of doing this using RAII, but unless you buy into the C++ model whole hog – which a fair number of systems programmers don’t – then there’s no good way to incrementally use RAII in your C programs.

More recently, Go has chosen error codes. Although Go’s approach is similar to C’s, it has been modernized with much nicer syntax and libraries.

Many functional languages use return codes disguised in monads and named things like

Option<T>,Maybe<T>, orError<T>, which, when coupled with a dataflow-style of programming and pattern matching, feel far more natural. This approach removes several major drawbacks to return codes that we’re about to discuss, especially compared to C. Rust has largely adopted this model but has dome some exciting things with it for systems programmers.Despite their simplicity, return codes do come with some baggage; in summary:

- Performance can suffer.

- Programming model usability can be poor.

- The biggie: You can accidentally forget to check for errors.

Let’s discuss each one, in order, with examples from the languages cited above.

Performance

Error codes fail the test of “zero overhead for common cases; pay for play for uncommon cases”:

-

There is calling convention impact. You now have two values to return (for non-

voidreturning functions): the actual return value and the possible error. This burns more registers and/or stack space, making calls less efficient. Inlining can of course help to recover this for the subset of calls that can be inlined. -

There are branches injected into callsites anywhere a callee can fail. I call costs like this “peanut butter,” because the checks are smeared across the code, making it difficult to measure the impact directly. In Midori we were able to experiment and measure, and confirm that yes, indeed, the cost here is nontrivial. There is also a secondary effect which is, because functions contain more branches, there is more risk of confusing the optimizer.

This might be surprising to some people, since undoubtedly everyone has heard that “exceptions are slow.” It turns out that they don’t have to be. And, when done right, they get error handling code and data off hot paths which increases I-cache and TLB performance, compared to the overheads above, which obviously decreases them.

Many high performance systems have been built using return codes, so you might think I’m nitpicking. As with many things we did, an easy criticism is that we took too extreme an approach. But the baggage gets worse.

Forgetting to Check Them

It’s easy to forget to check a return code. For example, consider a function:

int foo() { ... }Now at the callsite, what if we silently ignore the returned value entirely, and just keep going?

foo(); // Keep going -- but foo might have failed!At this point, you’ve masked a potentially critical error in your program. This is easily the most vexing and damaging problem with error codes. As I will show later, option types help to address this for functional languages. But in C-based languages, and even Go with its modern syntax, this is a real issue.

This problem isn’t theoretical. I’ve encountered numerous bugs caused by ignoring return codes and I’m sure you have too. Indeed, in the development of this very Error Model, my team encountered some fascinating ones. For example, when we ported Microsoft’s Speech Server to Midori, we found that 80% of Taiwan Chinese (

zh-tw) requests were failing. Not failing in a way the developers immediately saw, however; instead, clients would get a gibberish response. At first, we thought it was our fault. But then we discovered a silently swallowedHRESULTin the original code. Once we got it over to Midori, the bug was thrown into our faces, found, and fixed immediately after porting. This experience certainly informed our opinion about error codes.It’s surprising to me that Go made unused

imports an error, and yet missed this far more critical one. So close!It’s true you can add a static analysis checker, or maybe an “unused return value” warning as most commercial C++ compilers do. But once you’ve missed the opportunity to add it to the core of the language, as a requirement, none of those techniques will reach critical mass due to complaints about noisy analysis.

For what it’s worth, forgetting to use return values in our language was a compile time error. You had to explicitly ignore them; early on we used an API for this, but eventually devised language syntax – the equivalent of

>/dev/null:ignore foo();We didn’t use error codes, however the inability to accidentally ignore a return value was important for the overall reliability of the system. How many times have you debugged a problem only to find that the root cause was a return value you forgot to use? There have even been security exploits where this was the root cause. Letting developers say

ignorewasn’t bulletproof, of course, as they could still do the wrong thing. But it was at least explicit and auditable.Programming Model Usability

In C-based languages with error codes, you end up writing lots of hand-crafted

ifchecks everywhere after function calls. This can be especially tedious if many of your functions fail which, in C programs where allocation failures are also communicated with return codes, is frequently the case. It’s also clumsy to return multiple values.A warning: this complaint is subjective. In many ways, the usability of return codes is actually elegant. You reuse very simple primitives – integers, returns, and

ifbranches – that are used in myriad other situations. In my humble opinion, errors are an important enough aspect of programming that the language should be helping you out.Go has a nice syntactic shortcut to make the standard return code checking slightly more pleasant:

if err := foo(); err != nil { // Deal with the error. }Notice that we’ve invoked

fooand checked whether the error is non-nilin one line. Pretty neat.The usability problems don’t stop there, however.

It’s common that many errors in a given function should share some recovery or remediation logic. Many C programmers use labels and

gotos to structure such code. For example:int error; // ... error = step1(); if (error) { goto Error; } // ... error = step2(); if (error) { goto Error; } // ... // Normal function exit. return 0; // ... Error: // Do something about `error`. return error;Needless to say, this is the kind of code only a mother could love.

In languages like D, C#, and Java, you have

finallyblocks to encode this “before scope exits” pattern more directly. Similarly, Microsoft’s proprietary extensions to C++ offer__finally, even if you’re not fully buying into RAII and exceptions. And D providesscopeand Go offersdefer. All of these help to eradicate thegoto Errorpattern.Next, imagine my function wants to return a real value and the possibility of an error? We’ve burned the return slot already so there are two obvious possibilities:

-

We can use the return slot for one of the two values (commonly the error), and another slot – like a pointer parameter – for the other of the two (commonly the real value). This is the common approach in C.

-

We can return a data structure that carries the possibility of both in its very structure. As we will see, this is common in functional languages. But in a language like C, or Go even, that lacks parametric polymorphism, you lose typing information about the returned value, so this is less common to see. C++ of course adds templates, so in principle it could do this, however because it adds exceptions, the ecosystem around return codes is lacking.

In support of the performance claims above, imagine what both of these do to your program’s resulting assembly code.

Returning Values “On The Side”

An example of the first approach in C looks like this:

int foo(int* out) { // <try something here> if (failed) { return 1; } *out = 42; return 0; }The real value has to be returned “on the side,” making callsites clumsy:

int value; int ret = foo(&value); if (ret) { // Error! Deal with it. } else { // Use value... }In addition to being clumsy, this pattern perturbs your compiler’s definite assignment analysis which impairs your ability to get good warnings about things like using uninitialized values.

Go also takes aim at this problem with nicer syntax, thanks to multi-valued returns:

func foo() (int, error) { if failed { return 0, errors.New("Bad things happened") } return 42, nil }And callsites are much cleaner as a result. Combined with the earlier feature of single-line

ifchecking for errors – a subtle twist, since at first glance thevaluereturn wouldn’t be in scope, but it is – this gets a touch nicer:if value, err := foo(); err != nil { // Error! Deal with it. } else { // Use value ... }Notice that this also helps to remind you to check the error. It’s not bulletproof, however, because functions can return an error and nothing else, at which point forgetting to check it is just as easy as it is in C.

As I mentioned above, some would argue against me on the usability point. Especially Go’s designers, I suspect. A big appeal to Go using error codes is as a rebellion against the overly complex languages in today’s landscape. We have lost a lot of what makes C so elegant – that you can usually look at any line of code and guess what machine code it translates into. I won’t argue against these points. In fact, I vastly prefer Go’s model over both unchecked exceptions and Java’s incarnation of checked exceptions. Even as I write this post, having written lots of Go lately, I look at Go’s simplicity and wonder, did we go too far with all the

tryandrequiresand so on that you’ll see shortly? I’m not sure. Go’s error model tends to be one of the most divisive aspect of the language; it’s probably largely because you can’t be sloppy with errors as in most languages, however programmers really did enjoy writing code in Midori’s. In the end, it’s hard to compare them. I’m convinced both can be used to write reliable code.Return Values in Data Structures

Functional languages address many of the usability challenges by packaging up the possibility of either a value or an error, into a single data structure. Because you’re forced to pick apart the error from the value if you want to do anything useful with the value at the callsite – which, thanks to a dataflow style of programming, you probably will – it’s easy to avoid the killer problem of forgetting to check for errors.

For an example of a modern take on this, check out Scala’s

Optiontype. The unfortunate news is that some languages, like those in the ML family and even Scala (thanks to its JVM heritage), mix this elegant model with the world of unchecked exceptions. This taints the elegance of the monadic data structure approach.Haskell does something even cooler and gives the illusion of exception handling while still using error values and local control flow:

There is an old dispute between C++ programmers on whether exceptions or error return codes are the right way. Niklas Wirth considered exceptions to be the reincarnation of GOTO and thus omitted them in his languages. Haskell solves this problem a diplomatic way: Functions return error codes, but the handling of error codes does not uglify the code.

The trick here is to support all the familiar

throwandcatchpatterns, but using monads rather than control flow.Although Rust also uses error codes it is also in the style of the functional error types. For example, imagine we are writing a function named

barin Go: we’d like to callfoo, and then simply propagate the error to our caller if it fails:func bar() error { if value, err := foo(); err != nil { return err } else { // Use value ... } }The longhand version in Rust isn’t any more concise. It might, however, send C programmers reeling with its foreign pattern matching syntax (a real concern but not a dealbreaker). Anyone comfortable with functional programming, however, probably won’t even blink, and this approach certainly serves as a reminder to deal with your errors:

fn bar() -> Result<(), Error> { match foo() { Ok(value) => /* Use value ... */, Err(err) => return Err(err) } }But it gets better. Rust has a

try!macro that reduces boilerplate like the most recent example to a single expression:fn bar() -> Result<(), Error> { let value = try!(foo); // Use value ... }This leads us to a beautiful sweet spot. It does suffer from the performance problems I mentioned earlier, but does very well on all other dimensions. It alone is an incomplete picture – for that, we need to cover fail-fast (a.k.a. abandonment) – but as we will see, it’s far better than any other exception-based model in widespread use today.

Exceptions

The history of exceptions is fascinating. During this journey I spent countless hours retracing the industry’s steps. That includes reading some of the original papers – like Goodenough’s 1975 classic, Exception Handling: Issues and a Proposed Notation – in addition to looking at the approaches of several languages: Ada, Eiffel, Modula-2 and 3, ML, and, most inspirationally, CLU. Many papers do a better job than I can summarizing the long and arduous journey, so I won’t do that here. Instead, I’ll focus on what works and what doesn’t work for building reliable systems.

Reliability is the most important of our requirements above when developing the Error Model. If you can’t react appropriately to failures, your system, by definition, won’t be very reliable. Operating systems generally speaking need to be reliable. Sadly, the most commonplace model – unchecked exceptions – is the worst you can do in this dimension.

For these reasons, most reliable systems use return codes instead of exceptions. They make it possible to locally reason about and decide how best to react to error conditions. But I’m getting ahead of myself. Let’s dig in.

Unchecked Exceptions

A quick recap. In an unchecked exceptions model, you

throwandcatchexceptions, without it being part of the type system or a function’s signature. For example:// Foo throws an unhandled exception: void Foo() { throw new Exception(...); } // Bar calls Foo, and handles that exception: void Bar() { try { Foo(); } catch (Exception e) { // Handle the error. } } // Baz also calls Foo, but does not handle that exception: void Baz() { Foo(); // Let the error escape to our callers. }In this model, any function call – and sometimes any statement – can throw an exception, transferring control non-locally somewhere else. Where? Who knows. There are no annotations or type system artifacts to guide your analysis. As a result, it’s difficult for anyone to reason about a program’s state at the time of the throw, the state changes that occur while that exception is propagated up the call stack – and possibly across threads in a concurrent program – and the resulting state by the time it gets caught or goes unhandled.

It’s of course possible to try. Doing so requires reading API documentation, doing manual audits of the code, leaning heavily on code reviews, and a healthy dose of luck. The language isn’t helping you out one bit here. Because failures are rare, this tends not to be as utterly disastrous as it sounds. My conclusion is that’s why many people in the industry think unchecked exceptions are “good enough.” They stay out of your way for the common success paths and, because most people don’t write robust error handling code in non-systems programs, throwing an exception usually gets you out of a pickle fast. Catching and then proceeding often works too. No harm, no foul. Statistically speaking, programs “work.”

Maybe statistical correctness is okay for scripting languages, but for the lowest levels of an operating system, or any mission critical application or service, this isn’t an appropriate solution. I hope this isn’t controversial.

.NET makes a bad situation even worse due to asynchronous exceptions. C++ has so-called “asynchronous exceptions” too: these are failures that are triggered by hardware faults, like access violations. It gets really nasty in .NET, however. An arbitrary thread can inject a failure at nearly any point in your code. Even between the RHS and LHS of an assignment! As a result, things that look atomic in source code aren’t. I wrote about this 10 years ago and the challenges still exist, although the risk has lessened as .NET generally learned that thread aborts are problematic. The new CoreCLR even lacks AppDomains, and the new ASP.NET Core 1.0 stack certainly doesn’t use thread aborts like it used to. But the APIs are still there.

There’s a famous interview with Anders Hejlsberg, C#’s chief designer, called The Trouble with Checked Exceptions. From a systems programmer’s perspective, much of it leaves you scratching your head. No statement affirms that the target customer for C# was the rapid application developer more than this:

Bill Venners: But aren’t you breaking their code in that case anyway, even in a language without checked exceptions? If the new version of foo is going to throw a new exception that clients should think about handling, isn’t their code broken just by the fact that they didn’t expect that exception when they wrote the code?

Anders Hejlsberg : No, because in a lot of cases, people don’t care. They’re not going to handle any of these exceptions. There’s a bottom level exception handler around their message loop. That handler is just going to bring up a dialog that says what went wrong and continue. The programmers protect their code by writing try finally’s everywhere, so they’ll back out correctly if an exception occurs, but they’re not actually interested in handling the exceptions.

This reminds me of

On Error Resume Nextin Visual Basic, and the way Windows Forms automatically caught and swallowed errors thrown by the application, and attempted to proceed. I’m not blaming Anders for his viewpoint here; heck, for C#’s wild popularity, I’m convinced it was the right call given the climate at the time. But this sure isn’t the way to write operating system code.C++ at least tried to offer something better than unchecked exceptions with its

throwexception specifications. Unfortunately, the feature relied on dynamic enforcement which sounded its death knell instantaneously.If I write a function

void f() throw(SomeError), the body offis still free to invoke functions that throw things other thanSomeError. Similarly, if I state thatfthrows no exceptions, usingvoid f() throw(), it’s still possible to invoke things that throw. To implement the stated contract, therefore, the compiler and runtime must ensure that, should this happen,std::unexpectedis called to rip the process down in response.I’m not the only person to recognize this design was a mistake. Indeed,

throwis now deprecated. A detailed WG21 paper, Deprecating Exception Specifications, describes how C++ ended up here, and has this to offer in its opening statement:Exception specifications have proven close to worthless in practice, while adding a measurable overhead to programs.

The authors list three reasons for deprecating

throw. Two of the three reasons were a result of the dynamic choice: runtime checking (and its associated opaque failure mode) and runtime performance overheads. The third reason, lack of composition in generic code, could have been dealt with using a proper type system (admittedly at an expense).But the worst part is that the cure relies on yet another dynamically enforced construct – the

noexceptspecifier – which, in my opinion, is just as bad as the disease.“Exception safety” is a commonly discussed practice in the C++ community. This approach neatly classifies how functions are intended to behave from a caller’s perspective with respect to failure, state transitions, and memory management. A function falls into one of four kinds: no-throw means forward progress is guaranteed and no exceptions will emerge; strong safety means that state transitions happen atomically and a failure will not leave behind partially committed state or broken invariants; basic safety means that, though a function might partially commit state changes, invariants will not be broken and leaks are prevented; and finally, no safety means anything’s possible. This taxonomy is quite helpful and I encourage anyone to be intentional and rigorous about error behavior, either using this approach or something similar. Even if you’re using error codes. The problem is, it’s essentially impossible to follow these guidelines in a system using unchecked exceptions, except for leaf node data structures that call a small and easily auditable set of other functions. Just think about it: to guarantee strong safety everywhere, you would need to consider the possibility of all function calls throwing, and safeguard the surrounding code accordingly. That either means programming defensively, trusting another function’s documented English prose (that isn’t being checked by a computer), getting lucky and only calling

noexceptfunctions, or just hoping for the best. Thanks to RAII, the leak-freedom aspect of basic safety is easier to attain – and pretty common these days thanks to smart pointers – but even broken invariants are tricky to prevent. The article Exception Handling: A False Sense of Security sums this up well.For C++, the real solution is easy to predict, and rather straightforward: for robust systems programs, don’t use exceptions. That’s the approach Embedded C++ takes, in addition to numerous realtime and mission critical guidelines for C++, including NASA’s Jet Propulsion Laboratory’s. C++ on Mars sure ain’t using exceptions anytime soon.

So if you can safely avoid exceptions and stick to C-like return codes in C++, what’s the beef?

The entire C++ ecosystem uses exceptions. To obey the above guidance, you must avoid significant parts of the language and, it turns out, significant chunks of the library ecosystem. Want to use the Standard Template Library? Too bad, it uses exceptions. Want to use Boost? Too bad, it uses exceptions. Your allocator likely throws

bad_alloc. And so on. This even causes insanity like people creating forks of existing libraries that eradicates exceptions. The Windows kernel, for instance, has its own fork of the STL that doesn’t use exceptions. This bifurcation of the ecosystem is neither pleasant nor practical to sustain.This mess puts us in a bad spot. Especially because many languages use unchecked exceptions. It’s clear that they are ill-suited for writing low-level, reliable systems code. (I’m sure I will make a few C++ enemies by saying this so bluntly.) After writing code in Midori for years, it brings me tears to go back and write code that uses unchecked exceptions; even simply code reviewing is torture. But “thankfully” we have checked exceptions from Java to learn and borrow from … Right?

Checked Exceptions

Ah, checked exceptions. The rag doll that nearly every Java programmer, and every person who’s observed Java from an arm’s length distance, likes to beat on. Unfairly so, in my opinion, when you compare it to the unchecked exceptions mess.

In Java, you know mostly what a method might throw, because a method must say so:

void foo() throws FooException, BarException { ... }Now a caller knows that invoking

foocould result in eitherFooExceptionorBarExceptionbeing thrown. At callsites a programmer must now decide: 1) propagate thrown exceptions as-is, 2) catch and deal with them, or 3) somehow transform the type of exception being thrown (possibly even “forgetting” the type altogether). For instance:// 1) Propagate exceptions as-is: void bar() throws FooException, BarException { foo(); } // 2) Catch and deal with them: void bar() { try { foo(); } catch (FooException e) { // Deal with the FooException error conditions. } catch (BarException e) { // Deal with the BarException error conditions. } } // 3) Transform the exception types being thrown: void bar() throws Exception { foo(); }This is getting much closer to something we can use. But it fails on a few accounts:

-

Exceptions are used to communicate unrecoverable bugs, like null dereferences, divide-by-zero, etc.

-

You don’t actually know everything that might be thrown, thanks to our little friend

RuntimeException. Because Java uses exceptions for all error conditions – even bugs, per above – the designers realized people would go mad with all those exception specifications. And so they introduced a kind of exception that is unchecked. That is, a method can throw it without declaring it, and so callers can invoke it seamlessly. -

Although signatures declare exception types, there is no indication at callsites what calls might throw.

-

People hate them.

That last one is interesting, and I shall return to it later when describing the approach Midori took. In summary, peoples’ distaste for checked exceptions in Java is largely derived from, or at least significantly reinforced by, the other three bullets above. The resulting model seems to be the worst of both worlds. It doesn’t help you to write bulletproof code and it’s hard to use. You end up writing down a lot of gibberish in your code for little perceived benefit. And versioning your interfaces is a pain in the ass. As we’ll see later, we can do better.

That versioning point is worth a ponder. If you stick to a single kind of

throw, then the versioning problem is no worse than error codes. Either a function fails or it doesn’t. It’s true that if you design version 1 of your API to have no failure mode, and then want to add failures in version 2, you’re screwed. As you should be, in my opinion. An API’s failure mode is a critical part of its design and contract with callers. Just as you wouldn’t change the return type of an API silently without callers needing to know, you shouldn’t change its failure mode in a semantically meaningful way. More on this controversial point later on.CLU has an interesting approach, as described in this crooked and wobbly scan of a 1979 paper by Barbara Liskov, Exception Handling in CLU. Notice that they focus a lot on “linguistics”; in other words, they wanted a language that people would love. The need to check and repropagate all errors at callsites felt a lot more like return values, yet the programming model had that richer and slightly declarative feel of what we now know as exceptions. And most importantly,

signals (their name forthrow) were checked. There were also convenient ways to terminate the program should an unexpectedsignaloccur.Universal Problems with Exceptions

Most exception systems get a few major things wrong, regardless of whether they are checked or unchecked.

First, throwing an exception is usually ridiculously expensive. This is almost always due to the gathering of a stack trace. In managed systems, gathering a stack trace also requires groveling metadata, to create strings of function symbol names. If the error is caught and handled, however, you don’t even need that information at runtime! Diagnostics are better implemented in the logging and diagnostics infrastructure, not the exception system itself. The concerns are orthogonal. Although, to really nail the diagnostics requirement above, something needs to be able to recover stack traces; never underestimate the power of

printfdebugging and how important stack traces are to it.Next, exceptions can significantly impair code quality. I touched on this topic in my last post, and there are good papers on the topic in the context of C++. Not having static type system information makes it hard to model control flow in the compiler, which leads to overly conservative optimizers.

Another thing most exception systems get wrong is encouraging too coarse a granularity of handling errors. Proponents of return codes love that error handling is localized to a specific function call. (I do too!) In exception handling systems, it’s all too easy to slap a coarse-grained

try/catchblock around some huge hunk of code, without carefully reacting to individual failures. That produces brittle code that’s almost certainly wrong; if not today, then after the inevitable refactoring that will occur down the road. A lot of this has to do with having the right syntaxes.Finally, control flow for

throws is usually invisible. Even with Java, where you annotate method signatures, it’s not possible to audit a body of code and see precisely where exceptions come from. Silent control flow is just as bad asgoto, orsetjmp/longjmp, and makes writing reliable code very difficult.Where Are We?

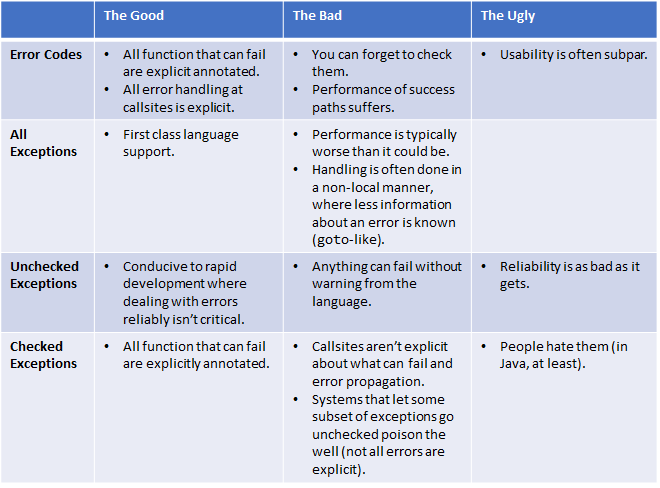

Before moving on, let’s recap where we are:

Wouldn’t it be great if we could take all of The Goods and leave out The Bads and The Uglies?

This alone would be a great step forward. But it’s insufficient. This leads me to our first big “ah-hah” moment that shaped everything to come. For a significant class of error, none of these approaches are appropriate!

Bugs Aren’t Recoverable Errors!

A critical distinction we made early on is the difference between recoverable errors and bugs:

-

A recoverable error is usually the result of programmatic data validation. Some code has examined the state of the world and deemed the situation unacceptable for progress. Maybe it’s some markup text being parsed, user input from a website, or a transient network connection failure. In these cases, programs are expected to recover. The developer who wrote this code must think about what to do in the event of failure because it will happen in well-constructed programs no matter what you do. The response might be to communicate the situation to an end-user, retry, or abandon the operation entirely, however it is a predictable and, frequently, planned situation, despite being called an “error.”

-

A bug is a kind of error the programmer didn’t expect. Inputs weren’t validated correctly, logic was written wrong, or any host of problems have arisen. Such problems often aren’t even detected promptly; it takes a while until “secondary effects” are observed indirectly, at which point significant damage to the program’s state might have occurred. Because the developer didn’t expect this to happen, all bets are off. All data structures reachable by this code are now suspect. And because these problems aren’t necessarily detected promptly, in fact, a whole lot more is suspect. Depending on the isolation guarantees of your language, perhaps the entire process is tainted.

This distinction is paramount. Surprisingly, most systems don’t make one, at least not in a principled way! As we saw above, Java, C#, and dynamic languages just use exceptions for everything; and C and Go use return codes. C++ uses a mixture depending on the audience, but the usual story is a project picks a single one and uses it everywhere. You usually don’t hear of languages suggesting two different techniques for error handling, however.

Given that bugs are inherently not recoverable, we made no attempt to try. All bugs detected at runtime caused something called abandonment, which was Midori’s term for something otherwise known as “fail-fast”.

Each of the above systems offers abandonment-like mechanisms. C# has

Environment.FailFast; C++ hasstd::terminate; Go haspanic; Rust haspanic!; and so on. Each rips down the surrounding context abruptly and promptly. The scope of this context depends on the system – for example, C# and C++ terminate the process, Go the current Goroutine, and Rust the current thread, optionally with a panic handler attached to salvage the process.Although we did use abandonment in a more disciplined and ubiquitous way than is common, we certainly weren’t the first to recognize this pattern. This Haskell essay, articulates this distinction quite well:

I was involved in the development of a library that was written in C++. One of the developers told me that the developers are divided into the ones who like exceptions and the other ones who prefer return codes. As it seem to me, the friends of return codes won. However, I got the impression that they debated the wrong point: Exceptions and return codes are equally expressive, they should however not be used to describe errors. Actually the return codes contained definitions like

ARRAY_INDEX_OUT_OF_RANGE. But I wondered: How shall my function react, when it gets this return code from a subroutine? Shall it send a mail to its programmer? It could return this code to its caller in turn, but it will also not know how to cope with it. Even worse, since I cannot make assumptions about the implementation of a function, I have to expect anARRAY_INDEX_OUT_OF_RANGEfrom every subroutine. My conclusion is thatARRAY_INDEX_OUT_OF_RANGEis a (programming) error. It cannot be handled or fixed at runtime, it can only be fixed by its developer. Thus there should be no according return code, but instead there should be asserts.Abandoning fine grained mutable shared memory scopes is suspect – like Goroutines or threads or whatever – unless your system somehow makes guarantees about the scope of the potential damage done. However, it’s great that these mechanisms are there for us to use! It means using an abandonment discipline in these languages is indeed possible.

There are architectural elements necessary for this approach to succeed at scale, however. I’m sure you’re thinking “If I tossed the entire process each time I had a null dereference in my C# program, I’d have some pretty pissed off customers”; and, similarly, “That wouldn’t be reliable at all!” Reliability, it turns out, might not be what you think.

Reliability, Fault-Tolerance, and Isolation

Before we get any further, we need to state a central belief:

ShiFailure Happens.To Build a Reliable System

Common wisdom is that you build a reliable system by systematically guaranteeing that failure can never happen. Intuitively, that makes a lot of sense. There’s one problem: in the limit, it’s impossible. If you can spend millions of dollars on this property alone – like many mission critical, realtime systems do – then you can make a significant dent. And perhaps use a language like SPARK (a set of contract-based extensions to Ada) to formally prove the correctness of each line written. However, experience shows that even this approach is not foolproof.

Rather than fighting this fact of life, we embraced it. Obviously you try to eliminate failures where possible. The error model must make them transparent and easy to deal with. But more importantly, you architect your system so that the whole remains functional even when individual pieces fail, and then teach your system to recover those failing pieces gracefully. This is well known in distributed systems. So why is it novel?

At the center of it all, an operating system is just a distributed network of cooperating processes, much like a distributed cluster of microservices or the Internet itself. The main differences include things like latency; what levels of trust you can establish and how easily; and various assumptions about locations, identity, etc. But failure in highly asynchronous, distributed, and I/O intensive systems is just bound to happen. My impression is that, largely because of the continued success of monolithic kernels, the world at large hasn’t yet made the leap to “operating system as a distributed system” insight. Once you do, however, a lot of design principles become apparent.

As with most distributed systems, our architecture assumed process failure was inevitable. We went to great length to defend against cascading failures, journal regularly, and to enable restartability of programs and services.

You build things differently when you go in assuming this.

In particular, isolation is critical. Midori’s process model encouraged lightweight fine-grained isolation. As a result, programs and what would ordinarily be “threads” in modern operating systems were independent isolated entities. Safeguarding against failure of one such connection is far easier than when sharing mutable state in an address space.

Isolation also encourages simplicity. Butler Lampson’s classic Hints on Computer System Design explores this topic. And I always loved this quote from Hoare:

The unavoidable price of reliability is simplicity. (C. Hoare).

By keeping programs broken into smaller pieces, each of which can fail or succeed on its own, the state machines within them stay simpler. As a result, recovering from failure is easier. In our language, the points of possible failure were explicit, further helping to keep those internal state machines correct, and pointing out those connections with the messier outside world. In this world, the price of individual failure is not nearly as dire. I can’t over-emphasize this point. None of the language features I describe later would have worked so well without this architectural foundation of cheap and ever-present isolation.

Erlang has been very successful at building this property into the language in a fundamental way. It, like Midori, leverages lightweight processes connected by message passing, and encourages fault-tolerant architectures. A common pattern is the “supervisor,” where some processes are responsible for watching and, in the event of failure, restarting other processes. This article does a terrific job articulating this philosophy – “let it crash” – and recommended techniques for architecting reliable Erlang programs in practice.

The key thing, then, is not preventing failure per se, but rather knowing how and when to deal with it.

Once you’ve established this architecture, you beat the hell out of it to make sure it works. For us, this meant week-long stress runs, where processes would come and go, some due to failures, to ensure the system as a whole kept making good forward progress. This reminds me of systems like Netflix’s Chaos Monkey which just randomly kills entire machines in your cluster to ensure the service as a whole stays healthy.

I expect more of the world to adopt this philosophy as the shift to more distributed computing happens. In a cluster of microservices, for example, the failure of a single container is often handled seamlessly by the enclosing cluster management software (Kubernetes, Amazon EC2 Container Service, Docker Swarm, etc). As a result, what I describe in this post is possibly helpful for writing more reliable Java, Node.js/JavaScript, Python, and even Ruby services. The unfortunate news is you’re likely going to be fighting your languages to get there. A lot of code in your process is going to work real damn hard to keep limping along when something goes awry.

Abandonment

Even when processes are cheap and isolated and easy to recreate, it’s still reasonable to think that abandoning an entire process in the face of a bug is an overreaction. Let me try to convince you otherwise.

Proceeding in the face of a bug is dangerous when you’re trying to build a robust system. If a programmer didn’t expect a given situation that’s arisen, who knows whether the code will do the right thing anymore. Critical data structures may have been left behind in an incorrect state. As an extreme (and possibly slightly silly) example, a routine that is meant to round your numbers down for banking purposes might start rounding them up.

And you might be tempted to whittle down the granularity of abandonment to something smaller than a process. But that’s tricky. To take an example, imagine a thread in your process encounters a bug, and fails. This bug might have been triggered by some state stored in a static variable. Even though some other thread might appear to have been unaffected by the conditions leading to failure, you cannot make this conclusion. Unless some property of your system – isolation in your language, isolation of the object root-sets exposed to independent threads, or something else – it’s safest to assume that anything other than tossing the entire address space out the window is risky and unreliable.

Thanks to the lightweight nature of Midori processes, abandoning a process was more like abandoning a single thread in a classical system than a whole process. But our isolation model let us do this reliably.

I’ll admit the scoping topic is a slippery slope. Maybe all the data in the world has become corrupt, so how do you know that tossing the process is even enough?! There is an important distinction here. Process state is transient by design. In a well designed system it can be thrown away and recreated on a whim. It’s true that a bug can corrupt persistent state, but then you have a bigger problem on your hands – a problem that must be dealt with differently.

For some background, we can look to fault-tolerant systems design. Abandonment (fail-fast) is already a common technique in that realm, and we can apply much of what we know about these systems to ordinary programs and processes. Perhaps the most important technique is regularly journaling and checkpointing precious persistent state. Jim Gray’s 1985 paper, Why Do Computers Stop and What Can Be Done About It?, describes this concept nicely. As programs continue moving to the cloud, and become aggressively decomposed into smaller independent services, this clear separation of transient and persistent state is even more important. As a result of these shifts in how software is written, abandonment is far more achievable in modern architectures than it once was. Indeed, abandonment can help you avoid data corruption, because bugs detected before the next checkpoint prevent bad state from ever escaping.

Bugs in Midori’s kernel were handled differently. A bug in the microkernel, for instance, is an entirely different beast than a bug in a user-mode process. The scope of possible damage was greater, and the safest response was to abandon an entire “domain” (address space). Thankfully, most of what you’d think of being classic “kernel” functionality – the scheduler, memory manager, filesystem, networking stack, and even device drivers – was run instead in isolated processes in user-mode where failures could be contained in the usual ways described above.

Bugs: Abandonment, Assertions, and Contracts

A number of kinds of bugs in Midori might trigger abandonment:

- An incorrect cast.

- An attempt to dereference a

nullpointer. - An attempt to access an array outside of its bounds.

- Divide-by-zero.

- An unintended mathematical over/underflow.

- Out-of-memory.

- Stack overflow.

- Explicit abandonment.

- Contract failures.

- Assertion failures.

Our fundamental belief was that each is a condition the program cannot recover from. Let’s discuss each one.

Plain Old Bugs

Some of these situations are unquestionably indicative of a program bug.

An incorrect cast, attempt to dereference

null, array out-of-bounds access, or divide-by-zero are clearly problems with the program’s logic, in that it attempted an undeniably illegal operation. As we will see later, there are ways out (e.g., perhaps you want NaN-style propagation for DbZ). But by default we assume it’s a bug.Most programmers were willing to accept this without question. And dealing with them as bugs this way brought abandonment to the inner development loop where bugs during development could be found and fixed fast. Abandonment really did help to make people more productive at writing code. This was a surprise to me at first, but it makes sense.

Some of these situations, on the other hand, are subjective. We had to make a decision about the default behavior, often with controversy, and sometimes offer programmatic control.

Arithmetic Over/Underflow

Saying an unintended arithmetic over/underflow represents a bug is certainly a contentious stance. In an unsafe system, however, such things frequently lead to security vulnerabilities. I encourage you to review the National Vulnerability Database to see the sheer number of these.

In fact, the Windows TrueType Font parser, which we ported to Midori (with gains in performance), has suffered over a dozen of them in the past few years alone. (Parsers tend to be farms for security holes like this.)

This has given rise to packages like SafeInt, which essentially moves you away from your native language’s arithmetic operations, in favor of checked library ones.

Most of these exploits are of course also coupled with an access to unsafe memory. You could reasonably argue therefore that overflows are innocuous in a safe language and therefore should be permitted. It’s pretty clear, however, based on the security experience, that a program often does the wrong thing in the face of an unintended over/underflow. Simply put, developers frequently overlook the possibility, and the program proceeds to do unplanned things. That’s the definition of a bug which is precisely what abandonment is meant to catch. The final nail in the coffin on this one is that philisophically, when there was any question about correctness, we tended to err on the side of explicit intent.

Hence, all unannotated over/underflows were considered bugs and led to abandonment. This was similar to compiling C# with the

/checkedswitch, except that our compiler aggressively optimized redundant checks away. (Since few people ever think to throw this switch in C#, the code-generators don’t do nearly as aggressive a job in removing the inserted checks.) Thanks to this language and compiler co-development, the result was far better than what most C++ compilers will produce in the face of SafeInt arithmetic. Also as with C#, theuncheckedscoping construct could be used where over/underflow was intended.Although the initial reactions from most C# and C++ developers I’ve spoken to about this idea are negative about it, our experience was that 9 times out of 10, this approach helped to avoid a bug in the program. That remaining 1 time was usually an abandonment sometime late in one of our 72 hour stress runs – in which we battered the entire system with browsers and multimedia players and anything else we could do to torture the system – when some harmless counter overflowed. I always found it amusing that we spent time fixing these instead of the classical way products mature through the stress program, which is to say deadlocks and race conditions. Between you and me, I’ll take the overflow abandonments!

Out-of-Memory and Stack Overflow

Out-of-memory (OOM) is complicated. It always is. And our stance here was certainly contentious also.

In environments where memory is manually managed, error code-style of checking is the most common approach:

X* x = (X*)malloc(...); if (!x) { // Handle allocation failure. }This has one subtle benefit: allocations are painful, require thought, and therefore programs that use this technique are often more frugal and deliberate with the way they use memory. But it has a huge downside: it’s error prone and leads to huge amounts of frequently untested code-paths. And when code-paths are untested, they usually don’t work.

Developers in general do a terrible job making their software work properly right at the edge of resource exhaustion. In my experience with Windows and the .NET Framework, this is where egregious mistakes get made. And it leads to ridiculously complex programming models, like .NET’s so-called Constrained Execution Regions. A program limping along, unable to allocate even tiny amounts of memory, can quickly become the enemy of reliability. Chris Brumme’s wondrous Reliability post describes this and related challenges in all its gory glory.

Parts of our system were of course “hardened” in a sense, like the lowest levels of the kernel, where abandonment’s scope would be necessarily wider than a single process. But we kept this to as little code as possible.

For the rest? Yes, you guessed it: abandonment. Nice and simple.

It was surprising how much of this we got away with. I attribute most of this to the isolation model. In fact, we could intentionally let a process suffer OOM, and ensuing abandonment, as a result of resource management policy, and still remain confident that stability and recovery were built in to the overall architecture.

It was possible to opt-in to recoverable failure for individual allocations if you really wanted. This was not common in the slightest, however the mechanisms to support it were there. Perhaps the best motivating example is this: imagine your program wants to allocate a buffer of 1MB in size. This situation is different than your ordinary run-of-the-mill sub-1KB object allocation. A developer may very well be prepared to think and explicitly deal with the fact that a contiguous block of 1MB in size might not be available, and deal with it accordingly. For example:

var bb = try new byte[1024*1024] else catch; if (bb.Failed) { // Handle allocation failure. }Stack overflow is a simple extension of this same philosophy. Stack is just a memory-backed resource. In fact, thanks to our asynchronous linked stacks model, running out of stack was physically identical to running out of heap memory, so the consistency in how it was dealt with was hardly surprising to developers. Many systems treat stack overflow this way these days.

Assertions

An assertion was a manual check in the code that some condition held true, triggering abandonment if it did not. As with most systems, we had both debug-only and release code assertions, however unlike most other systems, we had more release ones than debug. In fact, our code was peppered liberally with assertions. Most methods had multiple.

This kept with the philosophy that it’s better to find a bug at runtime than to proceed in the face of one. And, of course, our backend compiler was taught how to optimize them aggressively as with everything else. This level of assertion density is similar to what guidelines for highly reliable systems suggest. For example, from NASA’s paper, The Power of Ten -Rules for Developing Safety Critical Code:

Rule: The assertion density of the code should average to a minimum of two assertions per function. Assertions are used to check for anomalous conditions that should never happen in real-life executions. Assertions must always be side-effect free and should be defined as Boolean tests.

Rationale: Statistics for industrial coding efforts indicate that unit tests often find at least one defect per 10 to 100 lines of code written. The odds of intercepting defects increase with assertion density. Use of assertions is often also recommended as part of strong defensive coding strategy.

To indicate an assertion, you simply called

Debug.AssertorRelease.Assert:void Foo() { Debug.Assert(something); // Debug-only assert. Release.Assert(something); // Always-checked assert. }We also implemented functionality akin to

__FILE__and__LINE__macros like in C++, in addition to__EXPR__for the text of the predicate expression, so that abandonments due to failed assertions contained useful information.In the early days, we used different “levels” of assertions than these. We had three levels,

Contract.Strong.Assert,Contract.Assert, andContract.Weak.Assert. The strong level meant “always checked,” the middle one meant “it’s up to the compiler,” and the weak one meant “only checked in debug mode.” I made the controversial decision to move away from this model. In fact, I’m pretty sure 49.99% of the team absolutely hated my choice of terminology (Debug.AssertandRelease.Assert), but I always liked them because it’s pretty unambiguous what they do. The problem with the old taxonomy was that nobody ever knew exactly when the assertions would be checked; confusion in this area is simply not acceptable, in my opinion, given how important good assertion discipline is to the reliability of one’s program.As we moved contracts to the language (more on that soon), we tried making

asserta keyword too. However, we eventually switched back to using APIs. The primary reason was that assertions were not part of an API’s signature like contracts are; and given that assertions could easily be implemented as a library, it wasn’t clear what we gained from having them in the language. Furthermore, policies like “checked in debug” versus “checked in release” simply didn’t feel like they belonged in a programming language. I’ll admit, years later, I’m still on the fence about this.Contracts

Contracts were the central mechanism for catching bugs in Midori. Despite us beginning with Singularity, which used Sing#, a variant of Spec#, we quickly moved away to vanilla C# and had to rediscover what we wanted. We ultimately ended up in a very different place after living with the model for years.

All contracts and assertions were proven side-effect free thanks to our language’s understanding of immutability and side-effects. This was perhaps the biggest area of language innovation, so I’ll be sure to write a post about it soon.

As with other areas, we were inspired and influenced by many other systems. Spec# is the obvious one. Eiffel was hugely influential especially as there are many published case studies to learn from. Research efforts like Ada-based SPARK and proposals for realtime and embedded systems too. Going deeper into the theoretical rabbit’s hole, programming logic like Hoare’s axiomatic semantics provide the foundation for all of it. For me, however, the most philosophical inspiration came from CLU’s, and later Argus’s, overall approach to error handling.

Preconditions and Postconditions

The most basic form of contract is a method precondition. This states what conditions must hold for the method to be dispatched. This is most often used to validate arguments. Sometimes it’s used to validate the state of the target object, however this was generally frowned upon, since modality is a tough thing for programmers to reason about. A precondition is essentially a guarantee the caller provides to the callee.

In our final model, a precondition was stated using the

requireskeyword:void Register(string name) requires !string.IsEmpty(name) { // Proceed, knowing the string isn't empty. }A slightly less common form of contract is a method postcondition. This states what conditions hold after the method has been dispatched. This is a guarantee the callee provides to the caller.

In our final model, a postcondition was stated using the

ensureskeyword:void Clear() ensures Count == 0 { // Proceed; the caller can be guaranteed the Count is 0 when we return. }It was also possible to mention the return value in the postcondition, through the special name

return. Old values – such as necessary for mentioning an input in a post-condition – could be captured throughold(..); for example:int AddOne(int value) ensures return == old(value)+1 { ... }Of course, pre- and postconditions could be mixed. For example, from our ring buffer in the Midori kernel:

public bool PublishPosition() requires RemainingSize == 0 ensures UnpublishedSize == 0 { ... }This method could safely execute its body knowing that

RemainingSizeis0and callers could safely execute after the return knowing thatUnpublishedSizeis also0.If any of these contracts are found to be false at runtime, abandonment occurs.

This is an area where we differ from other efforts. Contracts have recently became popular as an expression of program logics used in advanced proof techniques. Such tools prove truths or falsities about stated contracts, often using global analysis. We took a simpler approach. By default, contracts are checked at runtime. If a compiler could prove truth or falsehood at compile-time, it was free to elide runtime checks or issue a compile-time error, respectively.

Modern compilers have constraint-based analyses that do a good job at this, like the range analysis I mentioned in my last post. These propagate facts and use them to optimize code already. This includes eliminating redundant checks: either explicitly encoded in contracts, or in normal program logic. And they are trained to perform these analyses in reasonable amounts of time, lest programmers switch to a different, faster compiler. The theorem proving techniques simply did not scale for our needs; our core system module took over a day to analyze using the best in breed theorem proving analysis framework!

Furthermore, the contracts a method declared were part of its signature. This meant they would automatically show up in documentation, IDE tooltips, and more. A contract was as important as a method’s return and argument types. Contracts really were just an extension of the type system, using arbitrary logic in the language to control the shape of exchange types. As a result, all the usual subtyping requirements applied to them. And, of course, this facilitated modular local analysis which could be done in seconds using standard optimizing compiler techniques.

90-something% of the typical uses of exceptions in .NET and Java became preconditions. All of the

ArgumentNullException,ArgumentOutOfRangeException, and related types and, more importantly, the manual checks andthrows were gone. Methods are often peppered with these checks in C# today; there are thousands of these in .NET’s CoreFX repo alone. For example, here isSystem.IO.TextReader’sReadmethod:/// <summary> /// ... /// </summary> /// <exception cref="ArgumentNullException">Thrown if buffer is null.</exception> /// <exception cref="ArgumentOutOfRangeException">Thrown if index is less than zero.</exception> /// <exception cref="ArgumentOutOfRangeException">Thrown if count is less than zero.</exception> /// <exception cref="ArgumentException">Thrown if index and count are outside of buffer's bounds.</exception> public virtual int Read(char[] buffer, int index, int count) { if (buffer == null) { throw new ArgumentNullException("buffer"); } if (index < 0) { throw new ArgumentOutOfRangeException("index"); } if (count < 0) { throw new ArgumentOutOfRangeException("count"); } if (buffer.Length - index < count) { throw new ArgumentException(); } ... }This is broken for a number of reasons. It’s laboriously verbose, of course. All that ceremony! But we have to go way out of our way to document the exceptions when developers really ought not to ever catch them. Instead, they should find the bug during development and fix it. All this exception nonsense encourages very bad behavior.

If we use Midori-style contracts, on the other hand, this collapses to:

/// <summary> /// ... /// </summary> public virtual int Read(char[] buffer, int index, int count) requires buffer != null requires index >= 0 requires count >= 0 requires buffer.Length - index >= count { ... }There are a few appealing things about this. First, it’s more concise. More importantly, however, it self-describes the contract of the API in a way that documents itself and is easy to understand by callers. Rather than requiring programmers to express the error condition in English, the actual expressions are available for callers to read, and tools to understand and leverage. And it uses abandonment to communicate failure.

I should also mention we had plenty of contracts helpers to help developers write common preconditions. The above explicit range checking is very messy and easy to get wrong. Instead, we could have written:

public virtual int Read(char[] buffer, int index, int count) requires buffer != null requires Range.IsValid(index, count, buffer.Length) { ... }And, totally aside from the conversation at hand, coupled with two advanced features – arrays as slices and non-null types – we could have reduced the code to the following, while preserving the same guarantees:

public virtual int Read(char[] buffer) { ... }But I’m jumping way ahead …

Humble Beginnings

Although we landed on the obvious syntax that is very Eiffel- and Spec#-like – coming full circle – as I mentioned earlier, we really didn’t want to change the language at the outset. So we actually began with a simple API approach:

public bool PublishPosition() { Contract.Requires(RemainingSize == 0); Contract.Ensures(UnpublishedSize == 0); ... }There are a number of problems with this approach, as the .NET Code Contracts effort discovered the hard way.

First, contracts written this way are part of the API’s implementation, whereas we want them to be part of the signature. This might seem like a theoretical concern but it is far from being theoretical. We want the resulting program to contain built-in metadata so tools like IDEs and debuggers can display the contracts at callsites. And we want tools to be in a position to auto-generate documentation from the contracts. Burying them in the implementation doesn’t work unless you somehow disassemble the method to extract them later on (which is a hack).

This also makes it tough to integrate with a backend compiler which we found was necessary for good performance.

Second, you might have noticed an issue with the call to

Contract.Ensures. SinceEnsuresis meant to hold on all exit paths of the function, how would we implement this purely as an API? The answer is, you can’t. One approach is rewriting the resulting MSIL, after the language compiler emitted it, but that’s messy as all heck. At this point, you begin to wonder, why not simply acknowledge that this is a language expressivity and semantics issue, and add syntax?Another area of perpetual struggle for us was whether contracts are conditional or not. In many classical systems, you’d check contracts in debug builds, but not the fully optimized ones. For a long time, we had the same three levels for contracts that we did assertions mentioned earlier:

- Weak, indicated by

Contract.Weak.*, meaning debug-only. - Normal, indicated simply by

Contract.*, leaving it as an implementation decision when to check them. - Strong, indicated by

Contract.Strong.*, meaning always checked.

I’ll admit, I initially found this to be an elegant solution. Unfortunately, over time we found that there was constant confusion about whether “normal” contracts were on in debug, release, or all of the above (and so people misused weak and strong accordingly). Anyway, when we began integrating this scheme into the language and backend compiler toolchain, we ran into substantial issues and had to backpedal a little bit.

First, if you simply translated

Contract.Weak.Requirestoweak requiresandContract.Strong.Requirestostrong requires, in my opinion, you end up with a fairly clunky and specialized language syntax, with more policy than made me comfortable. It immediately calls out for parameterization and substitutability of theweak/strongpolicies.Next, this approach introduces a sort of new mode of conditional compilation that, to me, felt awkward. In other words, if you want a debug-only check, you can already say something like:

#if DEBUG requires X #endifFinally – and this was the nail in the coffin for me – contracts were supposed to be part of an API’s signature. What does it even mean to have a conditional contract? How is a tool supposed to reason about it? Generate different documentation for debug builds than release builds? Moreover, as soon as you do this, you lose a critical guarantee, which is that code doesn’t run if its preconditions aren’t met.

As a result, we nuked the entire conditional compilation scheme.

We ended up with a single kind of contract: one that was part of an API’s signature and checked all the time. If a compiler could prove the contract was satisfied at compile-time – something we spent considerable energy on – it was free to elide the check altogether. But code was guaranteed it would never execute if its preconditions weren’t satisfied. For cases where you wanted conditional checks, you always had the assertion system (described above).

I felt better about this bet when we deployed the new model and found that lots of people had been misusing the “weak” and “strong” notions above out of confusion. Forcing developers to make the decision led to healthier code.

Future Directions

A number of areas of development were at varying stages of maturity when our project wound down.

Invariants

We experimented a lot with invariants. Anytime we spoke to someone versed in design-by-contract, they were borderline appalled that we didn’t have them from day one. To be honest, our design did include them from the outset. But we never quite got around to finishing the implementation and deploying it. This was partly just due to engineering bandwidth, but also because some difficult questions remained. And honestly the team was almost always satisfied with the combination of pre- and post-conditions plus assertions. I suspect that in the fullness of time we’d have added invariants for completeness, but to this day some questions remain for me. I’d need to see it in action for a while.

The approach we had designed was where an

invariantbecomes a member of its enclosing type; for example:public class List<T> { private T[] array; private int count; private invariant index >= 0 && index < array.Length; ... }Notice that the

invariantis markedprivate. An invariant’s accessibility modifier controlled which members the invariant was required to hold for. For example, apublic invariantonly had to hold at the entry and exit of functions withpublicaccessibility; this allowed for the common pattern ofprivatefunctions temporarily violating invariants, so long aspublicentrypoints preserved them. Of course, as in the above example, a class was free to declare aprivate invarianttoo, which was required to hold at all function entries and exits.I actually quite liked this design, and I think it would have worked. The primary concern we all had was the silent introduction of checks all over the place. To this day, that bit still makes me nervous. For example, in the

List<T>example, you’d have theindex >= 0 && index < array.Lengthcheck at the beginning and end of every single function of the type. Now, our compiler eventually got very good at recognizing and coalescing redundant contract checks; and there were ample cases where the presence of a contract actually made code quality better. However, in the extreme example given above, I’m sure there would have been a performance penalty. That would have put pressure on us changing the policy for when invariants are checked, which would have possibly complicated the overall contracts model.I really wish we had more time to explore invariants more deeply. I don’t think the team sorely missed not having them – certainly I didn’t hear much complaining about their absence (probably because the team was so performance conscious) – but I do think invariants would have been a nice icing to put on the contracts cake.

Advanced Type Systems

I always liked to say that contracts begin where the type system leaves off. A type system allows you to encode attributes of variables using types. A type limits the expected range values that a variable might hold. A contract similarly checks the range of values that a variable holds. The difference? Types are proven at compile-time through rigorous and composable inductive rules that are moderately inexpensive to check local to a function, usually, but not always, aided by developer-authored annotations. Contracts are proven at compile-time where possible and at runtime otherwise, and as a result, permit far less rigorous specification using arbitrary logic encoded in the language itself.

Types are preferable, because they are guaranteed to be compile-time checked; and guaranteed to be fast to check. The assurances given to the developer are strong and the overall developer productivity of using them is better.

Limitations in a type system are inevitable, however; a type system needs to leave some wiggle room, otherwise it quickly grows unwieldly and unusable and, in the extreme, devolves into bi-value bits and bytes. On the other hand, I was always disappointed by two specific areas of wiggle room that required the use of contracts:

- Nullability.

- Numeric ranges.

Approximately 90% of our contracts fell into these two buckets. As a result, we seriously explored more sophisticated type systems to classify the nullability and ranges of variables using the type system instead of contracts.

To make it concrete, this was the difference between this code which uses contracts:

public virtual int Read(char[] buffer, int index, int count) requires buffer != null requires index >= 0 requires count >= 0 requires buffer.Length - index < count { ... }And this code which didn’t need to, and yet carried all the same guarantees, checked statically at compile-time:

public virtual int Read(char[] buffer) { ... }Placing these properties in the type system significantly lessens the burden of checking for error conditions. Lets say that for any given 1 producer of state there are 10 consumers. Rather than having each of those 10 defend themselves against error conditions, we can push the responsibility back onto that 1 producer, and either require a single assertion that coerces the type, or even better, that the value is stored into the right type in the first place.

Non-Null Types

The first one’s really tough: guaranteeing statically that variables do not take on the

nullvalue. This is what Tony Hoare has famously called his “billion dollar mistake”. Fixing this for good is a righteous goal for any language and I’m happy to see newer language designers tackling this problem head-on.Many areas of the language fight you every step of the way on this one. Generics, zero-initialization, constructors, and more. Retrofitting non-null into an existing language is tough!

The Type System

In a nutshell, non-nullability boiled down to some simple type system rules:

- All unadorned types

Twere non-null by default. - Any type could be modified with a

?, as inT?, to mark it nullable. nullis an illegal value for variables of non-null types.Timplicitly converts toT?. In a sense,Tis a subtype ofT?(although not entirely true).- Operators exist to convert a

T?to aT, with runtime checks that abandoned onnull.

Most of this is probably “obvious” in the sense that there aren’t many choices. The name of the game is systematically ensuring all avenues of

nullare known to the type system. In particular, nonullcan ever “sneakily” become the value of a non-nullTtype; this meant addressing zero-initialization, perhaps the hardest problem of all.The Syntax

Syntactically, we offered a few ways to accomplish #5, converting from

T?toT. Of course, we discouraged this, and preferred you to stay in “non-null” space as long as possible. But sometimes it’s simply not possible. Multi-step initialization happens from time to time – especially with collections data structures – and had to be supported.Imagine for a moment we have a map: